Mastering Object Streaming with the new Vercel AI SDK for Enhanced UX

Learn how object streaming works with Vercel's AI SDK, from setting up a schema to displaying data in real time, and use it to enhance your platform's user experience.

Imagine if you had a tool that could instantly generate innovative ideas for software or even entire tools, designed specifically to attract attention and encourage links to your website. Sound too good to be true? It's not. In fact, it's now a reality.

Yesterday, I unveiled a powerful, free AI tool on BacklinkGPT.com. This tool not only generates 10 unique ideas for software tools to serve as link bait but also presents them in an easily digestible format. The term 'link bait' refers to any feature or content on a website that's specifically designed to draw attention or encourage others to link to the website.

The timing was perfect: Vercel had released their new AI SDK just a few days before I started building the tool. The SDK removes a lot of boilerplate, takes care of technicalities such as encoding and decoding streamed text, and provides custom hooks that make working with edge functions seamless.

While the documentation makes streaming plain text relatively straightforward, it does not thoroughly explain how to stream objects and handle them in the frontend. So I'd like to share what I've learned about streaming objects with both LangchainJS and the Vercel AI SDK.

Why stream objects in the first place?

When I began designing the tool, I envisioned displaying all of the products as cards in a list rather than presenting them as one large chunk of text, which can be unpleasant to read.

By contrast, presenting a website summary as a single block of text works fine, because everything in it relates to one topic, as in the summary of backlinkgpt.com below.

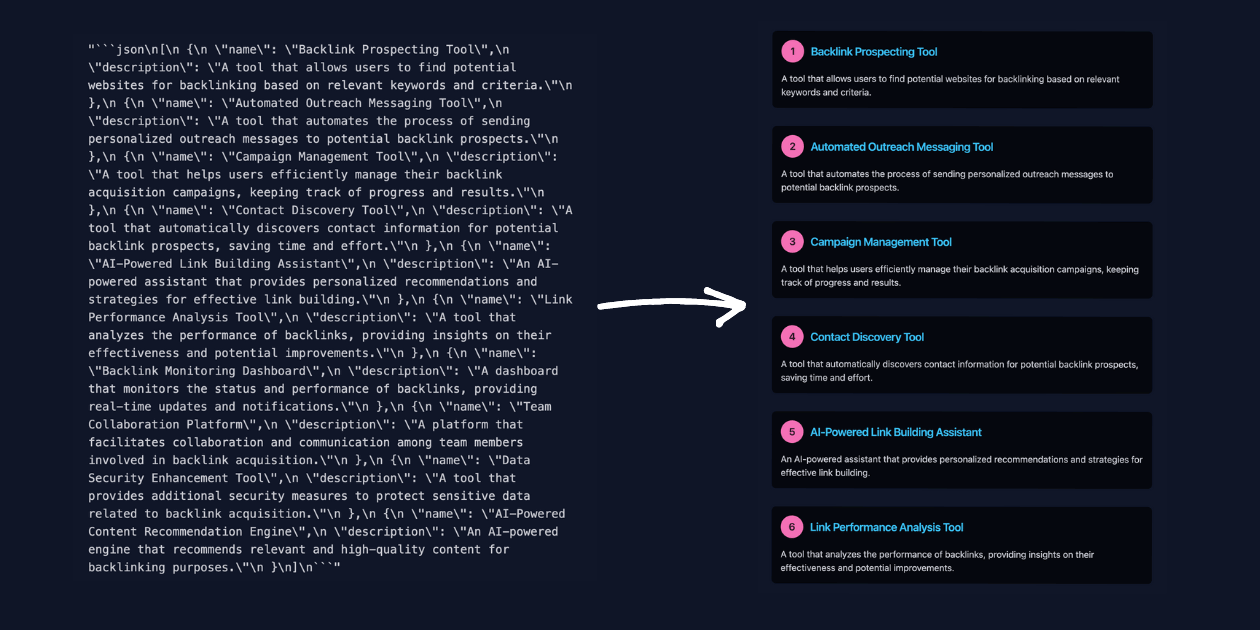

However, presenting 10 different ideas as a single, large chunk of text would be unappealing and difficult to read. Furthermore, creating objects allows you to incorporate more details into some of the generated responses, such as labels, numeric scores, or other potentially helpful elements.

The final design I arrived at looked something like this:

How to stream objects?

To start, I used LangchainJS's Structured Output Parser with a Zod schema. Here's the schema I defined for my tool. It's a simple array of JavaScript objects, each with a name and a description key:

The main idea is to generate format instructions based on a Zod schema and pass these into the prompt via the formatInstructions variable. When GPT responds, it will hopefully follow these instructions, providing a predictable output that you can then parse.
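
Mechanically, passing the instructions in is string substitution. LangchainJS prompt templates do this for you with {variable} placeholders; the sketch below does it by hand to make the step visible. The instruction wording, the template text and the fillTemplate helper are all hypothetical and are not the tool's actual prompt.

```typescript
// Hypothetical format instructions. In the tool they are generated from the
// Zod schema by the Structured Output Parser rather than written by hand.
const formatInstructions: string = [
  "Respond only with a JSON array.",
  'Each element must be an object with the string keys "name" and "description".',
].join("\n");

// Hypothetical prompt template; {key} marks a variable to substitute.
const template: string = [
  "Suggest 10 link bait tool ideas for the website {url}.",
  "{formatInstructions}",
].join("\n\n");

// Replace each {key} placeholder with the matching variable, throwing if the
// template references a variable that was not supplied.
function fillTemplate(tpl: string, vars: Record<string, string>): string {
  return tpl.replace(/\{(\w+)\}/g, (_match: string, key: string) => {
    const value = Object.prototype.hasOwnProperty.call(vars, key) ? vars[key] : undefined;
    if (value === undefined) {
      throw new Error(`Missing prompt variable: ${key}`);
    }
    return value;
  });
}

const prompt = fillTemplate(template, {
  url: "https://example.com",
  formatInstructions,
});
console.log(prompt);
```

Because String.prototype.replace does not rescan the text it inserts, braces inside the substituted instructions (a JSON example, say) are not mistaken for placeholders.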

So far I have only tested this code with a simple array of JavaScript objects without nested keys. If your schema is more complex, the code is very likely to break and may need further iteration.
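
Because the tested schema is flat, its shape is also easy to express without Zod. The sketch below is a plain-TypeScript stand-in, not the author's schema: the Idea, isIdea and isIdeaArray names are hypothetical, and it assumes each idea carries string name and description fields. A guard like this could, for example, check a parsed object on the client before rendering it as a card.

```typescript
// Plain-TypeScript stand-in for the flat schema described above.
// Assumption: each idea has string `name` and `description` fields.
interface Idea {
  name: string;
  description: string;
}

// Runtime check for one idea: a non-null object whose `name` and
// `description` properties are both strings (extra keys are allowed).
function isIdea(value: unknown): value is Idea {
  if (typeof value !== "object" || value === null) return false;
  const record = value as Record<string, unknown>;
  return typeof record.name === "string" && typeof record.description === "string";
}

// Runtime check for the whole response: an array whose elements are all ideas.
function isIdeaArray(value: unknown): value is Idea[] {
  return Array.isArray(value) && value.every(isIdea);
}

// Hypothetical model output, used only to exercise the checks.
const parsed: unknown = JSON.parse(
  '[{"name": "Broken Link Checker", "description": "Scans a page for dead links."}]',
);
console.log(isIdeaArray(parsed)); // true
console.log(isIdeaArray([{ name: "No description" }])); // false
```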

Next, we pass formatInstructions and the other variables into the prompt and stream the output straight back:

Once we've completed this process, we'll receive text snippets formatted like JSON strings, which will need to be parsed and pushed into an array of objects:
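
To see why these snippets need special handling, consider how a JSON array might arrive in pieces. The snippet boundaries below are invented for illustration (real ones depend on the model's tokens), and tryParse is a hypothetical helper. Parsing the accumulated text only succeeds once the closing bracket has arrived, even though the first object is already complete after the third snippet.

```typescript
// Hypothetical snippets, roughly how a JSON array might arrive over the stream.
const snippets: string[] = [
  '[{"name": "Keyword ',
  'Gap Finder", "description": "Compares',
  ' two domains."}, {"name": "Meta',
  ' Tag Previewer", "description": "Shows SERP snippets."}]',
];

// Attempt to parse text as JSON; return undefined instead of throwing.
function tryParse(text: string): unknown {
  try {
    return JSON.parse(text);
  } catch {
    return undefined;
  }
}

// Record, after each snippet, whether the text received so far is valid JSON.
let accumulated = "";
const parsedAt: boolean[] = [];
for (const snippet of snippets) {
  accumulated += snippet;
  parsedAt.push(tryParse(accumulated) !== undefined);
}
console.log(parsedAt); // [ false, false, false, true ]
```

Waiting for that final parse would defeat the point of streaming, which is what the client-side hooks below work around.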

So how does this work in the client component?

Registering the useCompletion hook

First, we set up the hook by passing in the api endpoint and an optional body, to which I added the url.

Next, since I am using react-hook-form, I manually call the completeIdeas function inside the onSubmit function on line 28:

Parsing text into JavaScript objects

The last part proved to be the most challenging: parsing the individual text fragments back into JavaScript objects. After receiving substantial assistance from GPT-4, I managed to abstract these processes into two hooks.

useBuffer is a React hook that accepts a string chunk and returns a cleaned version of it. It uses useState to hold the buffer and useEffect to update it whenever chunk changes.

In the useEffect block, chunk is stripped of JSON markdown syntax, newline characters are replaced with spaces, leading and trailing square brackets are removed, and excess whitespace is trimmed. The result is stored in the buffer state, and the hook returns the buffer. This gives the next step a clean string in which to look for objects.
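
The cleaning steps can be sketched as a plain function, which keeps the logic testable outside React; inside useBuffer it would run in the useEffect, with its result passed to the state setter. The name cleanChunk is hypothetical, and so is the assumption that "JSON markdown syntax" means code fences (three backticks, optionally followed by json) around the model's output.

```typescript
// Sketch of the cleaning steps described above as a pure function.
// Assumption: "JSON markdown syntax" means code fences of three backticks,
// optionally followed by "json", wrapped around the model's output.
function cleanChunk(chunk: string): string {
  return chunk
    .replace(/`{3}(?:json)?/g, "") // strip markdown code fences
    .replace(/\r?\n/g, " ") // replace newlines with spaces
    .trim() // trim first so any brackets sit at the edges
    .replace(/^\[/, "") // remove a leading square bracket
    .replace(/\]$/, "") // remove a trailing square bracket
    .trim(); // trim whitespace exposed by removing the brackets
}

// Hypothetical partial completion: a fenced JSON array cut off mid-object.
const fence = "`".repeat(3);
const partial = `${fence}json\n[\n  {"name": "A", "description": "B"},\n  {"name": "C"`;
console.log(cleanChunk(partial));
```

The output keeps both objects, the second still incomplete, but drops the fence, the newlines and the opening bracket.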

useUniqueJsonObjects is a custom React hook that scans a string buffer for JSON objects and stores them in an array, making sure each object appears only once.

It uses useState to maintain an objects state, initially an empty array. The addObject function, wrapped with useCallback for memoization, checks if an object already exists in objects. If not, it adds it to the state.

The useEffect block processes buffer, continually matching and parsing JSON objects within the string. For each valid JSON object, it invokes addObject and trims the processed part from buffer.

The hook returns the objects array, which contains all unique JSON objects found in buffer.
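
The same logic can be sketched without React. The hypothetical collectUniqueObjects takes the buffer plus the objects found so far and returns the updated list, standing in for the hook's objects state and addObject. It assumes flat objects whose string values contain no braces (in line with the caveat about nested keys) and detects duplicates by comparing JSON.stringify output; the author's hook may compare objects differently. If the buffer holds the whole completion so far, every update re-scans objects found earlier, which is where the uniqueness check earns its keep.

```typescript
type JsonObject = Record<string, unknown>;

// Assumption: objects are flat (no nested braces) and their string values
// contain no "{" or "}" characters.
const FLAT_OBJECT = /\{[^{}]*\}/;

// Scan `buffer` for complete JSON objects and append any that are not already
// in `existing`. Incomplete trailing text is simply left unmatched.
function collectUniqueObjects(buffer: string, existing: JsonObject[]): JsonObject[] {
  const objects = [...existing];
  const seen = new Set(objects.map((o) => JSON.stringify(o)));
  let rest = buffer;
  let match: RegExpExecArray | null = FLAT_OBJECT.exec(rest);
  while (match !== null) {
    try {
      const parsed = JSON.parse(match[0]) as JsonObject;
      const key = JSON.stringify(parsed);
      if (!seen.has(key)) {
        seen.add(key);
        objects.push(parsed);
      }
    } catch {
      // Brace-delimited text that is not valid JSON: skip it.
    }
    // Trim everything up to and including the processed match.
    rest = rest.slice(match.index + match[0].length);
    match = FLAT_OBJECT.exec(rest);
  }
  return objects;
}

// Hypothetical successive buffers, each holding everything streamed so far.
const buffers: string[] = [
  '{"name": "A", "description": "First"}, {"name": "B", "desc',
  '{"name": "A", "description": "First"}, {"name": "B", "description": "Second"}',
];

let ideas: JsonObject[] = [];
for (const buffer of buffers) {
  ideas = collectUniqueObjects(buffer, ideas);
  console.log(ideas.length); // 1, then 2
}
```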

And that's it! With these two hooks, we don't have to wait for the edge function to return the entire JSON array, or for the client to parse it all at once. Instead, each new object is displayed as soon as it is complete, which significantly improves the user experience.